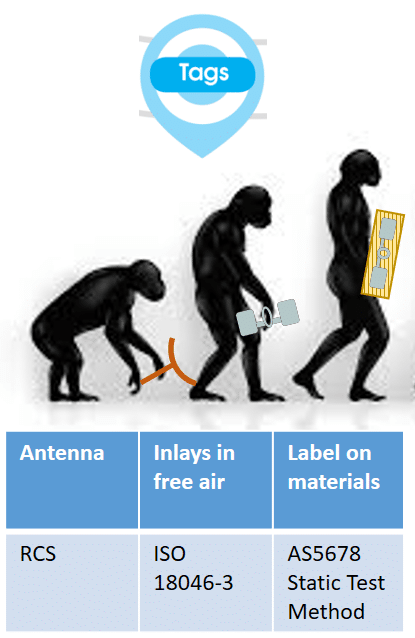

The evolution of organisms is a broadly accepted theory. Let me walk you through the phases that evolution has gone through in RAIN RFID tag testing.

Starting Point: The RFID Inlay

In the late 1990s there were no off-the-shelf solutions for RFID research and tag testing. Hence the classical radar cross-section (RCS) measurement seemed like a great way to characterize the UHF antenna of an inlay. However, such a passive antenna test did not even allow designers to optimize the forward link, that is, to match the impedance of the IC to the impedance of the antenna. As a result, getting the tag tuning right was a struggle. Additionally, the RCS measurement told nothing of the read range that the inlay design could deliver.
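To illustrate why the forward link hinges on the IC/antenna impedance match, here is a widely used textbook relation (not taken from this post): the power transmission coefficient between the tag antenna and the chip, combined with the Friis equation to estimate the forward-link-limited read range. This is a simplified sketch that assumes free-space propagation and ignores polarization mismatch and other losses. Here $Z_c = R_c + jX_c$ is the chip impedance, $Z_a = R_a + jX_a$ the antenna impedance, $P_t G_t$ the reader EIRP (relative to an isotropic radiator), $G_r$ the tag antenna gain, $P_{th}$ the chip's minimum turn-on power and $\lambda$ the wavelength:

$$
\tau = \frac{4 R_c R_a}{\lvert Z_c + Z_a \rvert^{2}}, \qquad 0 \le \tau \le 1
$$

$$
r_{\max} = \frac{\lambda}{4\pi} \sqrt{\frac{P_t G_t G_r \, \tau}{P_{th}}}
$$

The coefficient reaches $\tau = 1$ only at the conjugate match $Z_a = Z_c^{*}$, where $\lvert Z_c + Z_a \rvert^{2} = 4R_c^{2}$ and $R_a = R_c$. Because $r_{\max}$ scales with $\sqrt{\tau}$, any mistuning directly shortens the read range, which is exactly the quantity a plain antenna RCS measurement could not reveal.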

Delta radar cross-section (deltaRCS) was a serious step in the right direction for two reasons: the impedance match could be analyzed better, and the fundamental reverse link parameters were brought into consideration. Read ranges started to improve. Around 2005-2007 the first commercial tag test systems also became available. Those systems, such as the Tag Analyzer from SAVR Communications, Voyantic Tagformance and MeETS from CISC, already used the Class 1 Gen2 protocol to better capture the actual performance of an RFID inlay. Pavel Nikitin's paper from 2012 explains the theory and practicalities of the various test systems in detail.

As tag prototypes were made and production samples tested, many companies focused mainly on inlay performance in free-air conditions. It did not take long for the first experts to realize that the test results correlated better with real-world performance when the inlays were attached to various materials prior to testing. So, approaching the current decade, it seemed that half of the industry was busy working with various reference material sets and the other half with aluminum plates of various sizes.

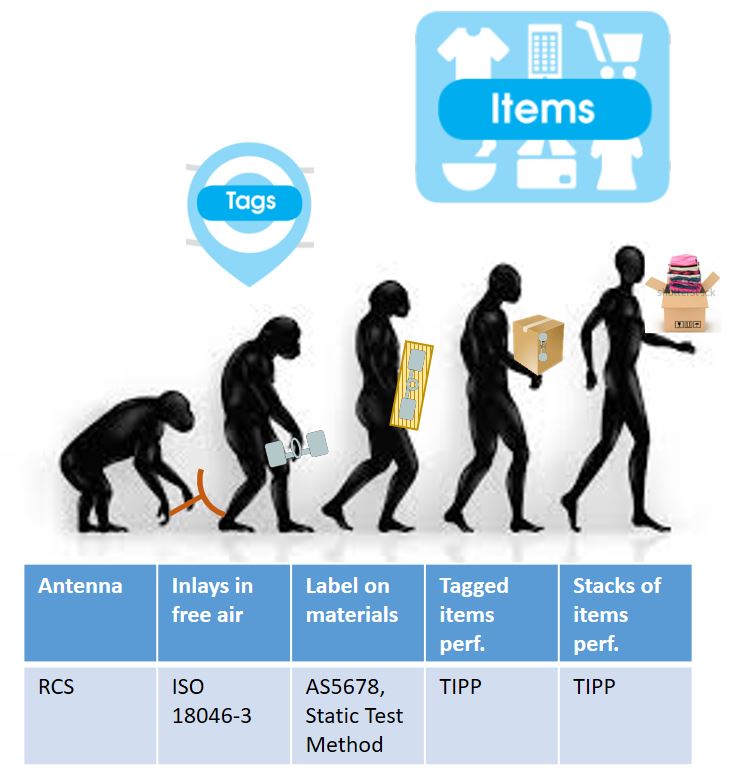

Era of Testing Tags on Items

To better capture the real-world performance of inlays, Voyantic introduced the Application Development Suite as early as 2008. With its Population Analysis function, anyone could visualize and study the behaviour and properties of tags in groups. As we later learned, very few people performed such analysis before 2011, which illustrates two related findings:

- The more groundbreaking the concept, the longer it takes to really sink in.

- It takes engineers a long time to learn how to explain certain groundbreaking concepts in an understandable way.

Tag-to-tag close-coupling effects are indeed complex and, even today, only partially understood and explained by the academic community. As a kind of workaround, the ARC Program emerged in 2011 to combine exhaustive label testing with data collected from actual RAIN use cases in retail. The outcomes of that analysis are the ARC performance categories and the related certified inlay lists.

These ARC inlay lists simplified tag selection for US retailers. I would also argue that the success of the ARC Program pushed technology vendors to seek additional ways to ease the adoption of RAIN RFID technology through collaboration. On the other hand, the Program may have slowed down market entry for new inlay types and vendors, because they needed to pay for certification tests and wait for the results before getting on those lists.

On the positive side, the wait pays off, because the ARC inlay lists are one practical way for a new vendor to gain access to US retail deployments.

Early this decade, performance testing elsewhere in the RFID ecosystem was already focused on tags on actual items. However, the industry lacked a documented, open framework for correlating different test setups with each other. This void, together with the industry's quest to improve the scalability of deployments, led to VILRI's tagged item prototype project, which eventually gave birth to the Tagged Item Performance Protocol (TIPP) in 2015.

TIPP is a standard-like guideline from GS1 that establishes and combines three fundamental aspects:

- Key performance metrics for RAIN-enabled items

- A test methodology that anyone can apply repeatably to extract these metrics

- Performance grades for individual and stacked items.

Among its other benefits, the open and thoroughly documented TIPP guideline lets anyone easily communicate their tagging requirements without sharing details of their processes and use cases.

By following the TIPP approach, tagging solution providers are free to innovate and offer their latest products and solutions immediately, without needing to have them certified by third parties.

How Would You Like Your RAIN Enabled Items? Separate, Boxed, Stacked, Hanging…

The fall 2017 update to TIPP introduces a new test protocol for dense hanging stacks. This protocol focuses on achieving 100% reads of all the items, and thus leaves the close-coupling effect purely for tagging experts to handle and solve. I anticipate that RAIN deployments, especially in sporting goods retail, will benefit from this new test protocol.

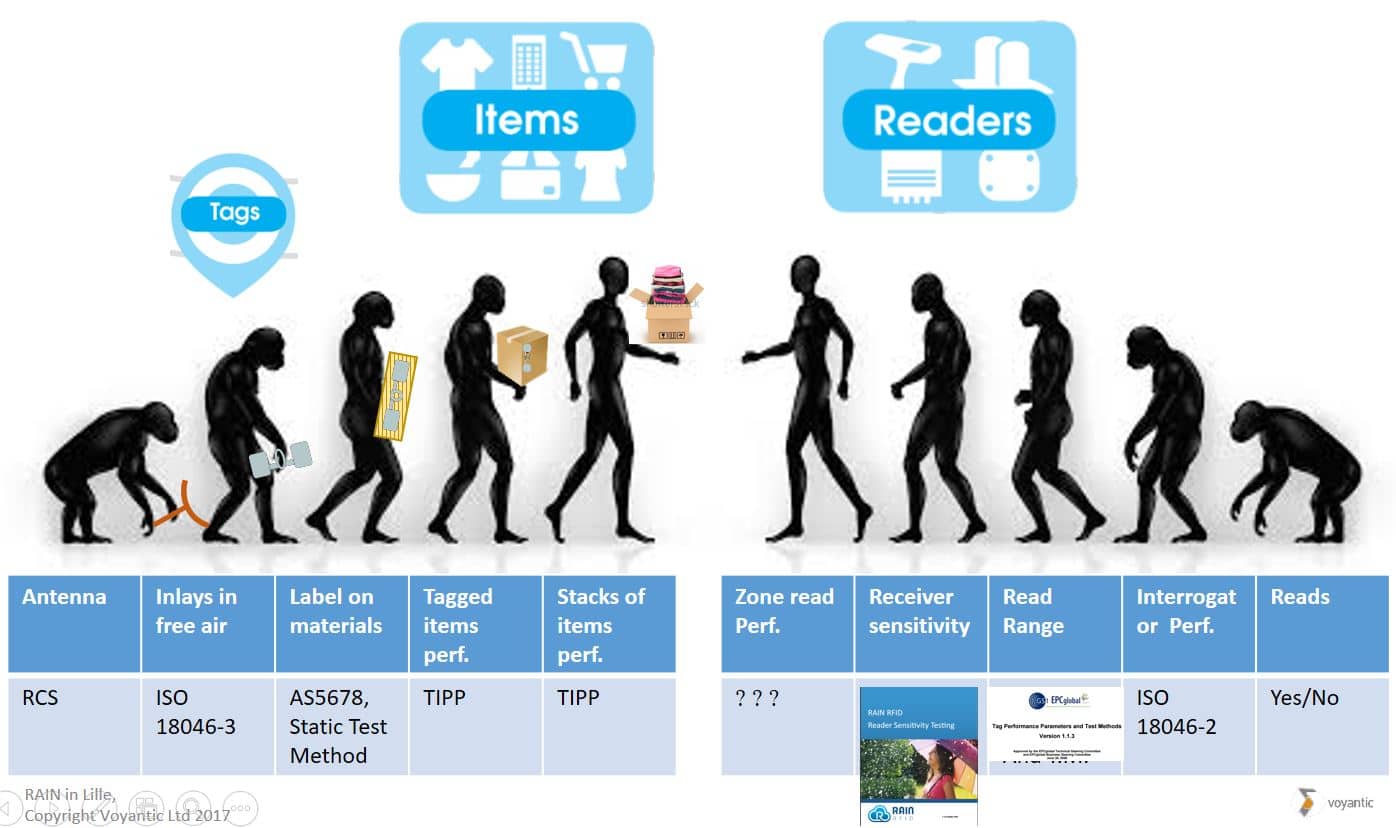

RAIN Read Performance Also Requires Input From the Reader Side

Although the tag side already enjoys a highly sophisticated performance test framework, a few pieces are still missing on the RAIN reader side. The Reader Sensitivity Test Recommendation from the RAIN Alliance was already a major milestone. The dialogue and evolution would speed up greatly if industry stakeholders, such as GS1 and the RAIN Alliance, took the initiative to derive meaningful open performance metrics for read zones and readers in general.

That's my evolution story for now. And no, the evolution of RAIN tag testing has not stalled; instead, it is constantly seeking new paths to help RAIN technology spread more efficiently. That is also where Voyantic keeps investing. Your feedback on these thoughts will be greatly appreciated!
